Kafka actions

Kafka Connector

Kafka Connector is the pluggable, declarative data integration framework for Kafka. It connects data sinks and sources to Kafka, allowing developers to quickly build and deploy data pipelines without having to manually configure each component. It provides an easy-to-use API and a wide variety of connectors to databases, key-value stores, search indexes and file systems. Kafka Connector is designed to be highly available and fault-tolerant and can be deployed in a distributed and scalable mode.

Kafka Connectors are ready-to-use components that import data from external systems into Kafka topics and export data from Kafka topics to external systems.

Types of connector

- Client

- Consumer

- Create Topic

- Delete Topic

- Producer

Description of each connector and its flow

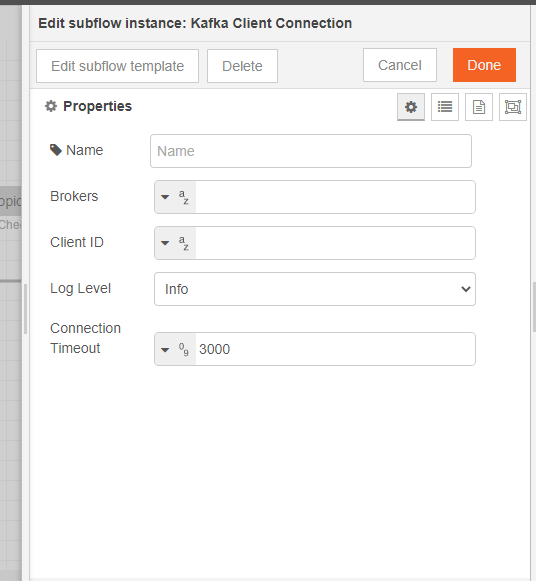

1. Client

- It creates the Kafka client connection.

This action initializes the connection configuration.

The following parameters are required to establish the connection:

Name: Give a valid name to the connection.

Brokers: A comma-separated list, for example:

- mybroker1.test.io:9092,mybroker2.test.io:9092,mybroker3.test.io:9092

Client ID

Log Level:

- Info (default)

- Warning

- Debug

- Error

- None

Connection Timeout

- default: 3000
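
The connection parameters above can be pictured as a small configuration object. The following is a minimal sketch in Python, not the connector's actual implementation: the function and key names (`parse_brokers`, `build_client_config`, `client_id`, `connection_timeout`) are hypothetical, and only the defaults (`Info` log level, timeout `3000`) come from the list above. It also shows how a comma-separated Brokers value breaks down into individual host and port pairs.

```python
LOG_LEVELS = ("Info", "Warning", "Debug", "Error", "None")


def parse_brokers(brokers):
    """Split a comma-separated broker list into (host, port) pairs."""
    pairs = []
    for entry in brokers.split(","):
        entry = entry.strip()
        if not entry:
            continue  # tolerate stray commas or trailing whitespace
        host, sep, port = entry.rpartition(":")
        if not sep or not host or not port.isdigit():
            raise ValueError(f"expected host:port, got {entry!r}")
        pairs.append((host, int(port)))
    if not pairs:
        raise ValueError("at least one broker is required")
    return pairs


def build_client_config(name, brokers, client_id=None,
                        log_level="Info", connection_timeout=3000):
    """Collect the Client parameters into one dict (hypothetical key names)."""
    if log_level not in LOG_LEVELS:
        raise ValueError(f"unknown log level: {log_level!r}")
    return {
        "name": name,
        "brokers": parse_brokers(brokers),
        "client_id": client_id,
        "log_level": log_level,
        "connection_timeout": connection_timeout,
    }


config = build_client_config(
    "my-kafka-client",
    "mybroker1.test.io:9092,mybroker2.test.io:9092,mybroker3.test.io:9092",
)
print(config["brokers"])
```

Validating the broker list before deployment catches a malformed entry (for example, a missing port) earlier than a failed connection would.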

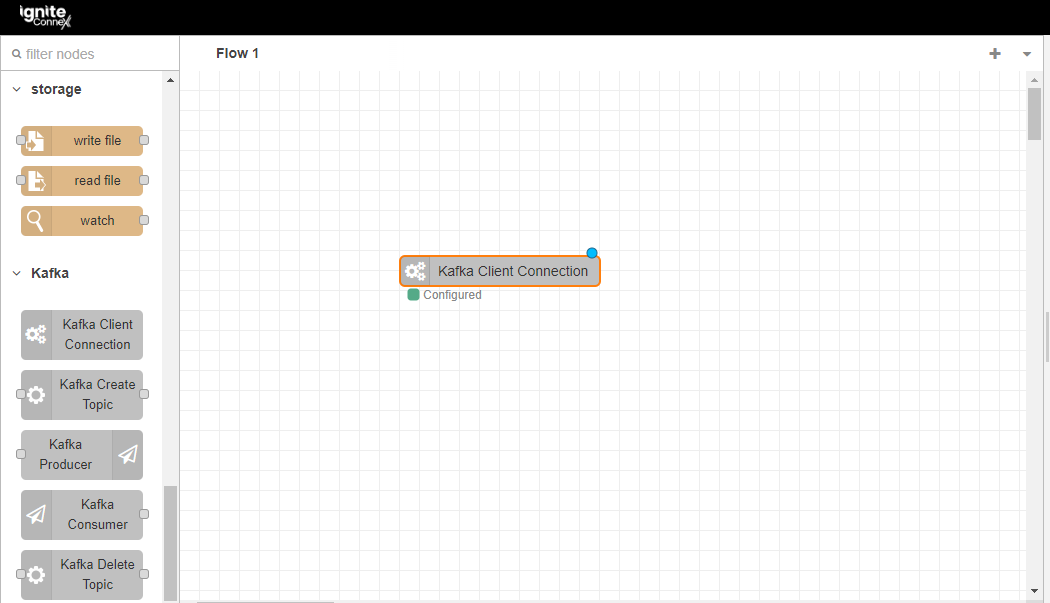

After a successful deployment, the flow looks like this:

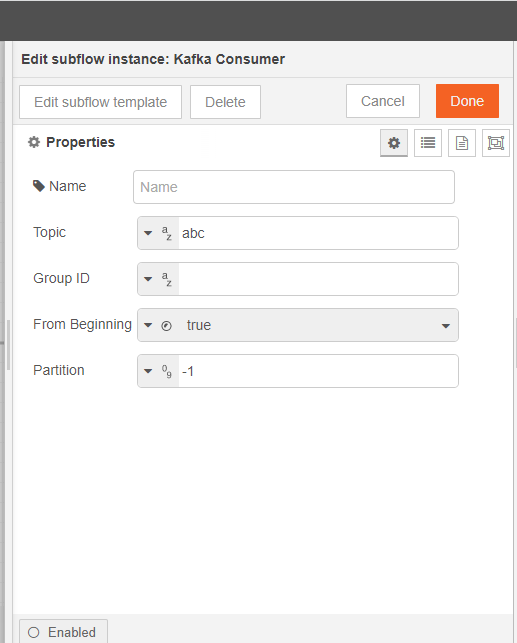

2. Consumer

- It consumes messages from the Kafka brokers.

The following parameters are required to initialize the Consumer connector:

Name: Give a valid name.

Group ID

default: ignitekafka(uuid)

From Beginning

default: true

Partition

- default: -1

- Warning: You must set this to a number or -1. If you leave it blank, it is set to partition 0. A value of -1 consumes messages from all partitions.

Key

When a message is consumed, the node outputs its payload.
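
The Partition rule above is easy to get wrong, so here is a small sketch of it in Python. It only models the behavior described in this section (a blank value falls back to partition 0, and `-1` means all partitions). The function name `resolve_partition` and the use of `None` to mean "all partitions" are illustrative assumptions, not the connector's code.

```python
def resolve_partition(raw):
    """Model how the Partition field is interpreted, per the rule above.

    Returns None for "all partitions", otherwise a partition number.
    """
    text = "" if raw is None else str(raw).strip()
    if text == "":
        return 0          # blank field: falls back to partition 0
    value = int(text)     # raises ValueError for non-numeric input
    if value == -1:
        return None       # -1: consume from every partition
    if value < 0:
        raise ValueError("partition must be -1 or a non-negative number")
    return value


for raw in ("", "-1", "2"):
    print(repr(raw), "->", resolve_partition(raw))
```

The practical takeaway is that a blank field and `-1` are not equivalent: leaving the field empty silently restricts the consumer to a single partition.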

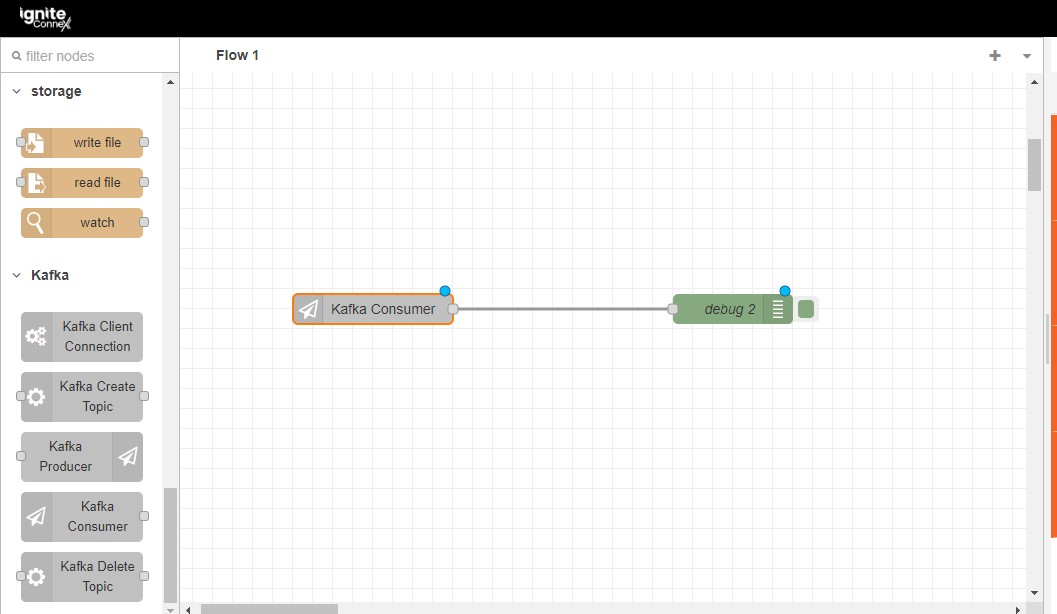

After a successful deployment, the flow looks like this:

3. Create Topic

- This action creates topics in Kafka.

The following properties are required to deploy the Create Topic connector:

Transaction Timeout

- default: 3000

This requires the input to be a JSON object. The Partitions value is optional.

Note: If the topic already exists, the node logs an error and produces no output.
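
Since the action expects a JSON object as input, a message might be prepared along these lines. This is a sketch only: the field names `topic` and `partitions` and the helper `build_create_topic_input` are assumptions chosen for illustration, because this section does not spell out the exact input schema. Check the connector's input format before relying on them.

```python
import json


def build_create_topic_input(topic, partitions=None):
    """Build a JSON object for Create Topic (field names are assumed)."""
    if not isinstance(topic, str) or not topic:
        raise ValueError("topic must be a non-empty string")
    payload = {"topic": topic}
    if partitions is not None:        # the partitions value is optional
        if not isinstance(partitions, int) or partitions < 1:
            raise ValueError("partitions must be a positive integer")
        payload["partitions"] = partitions
    return payload


print(json.dumps(build_create_topic_input("orders")))
print(json.dumps(build_create_topic_input("orders", partitions=3)))
```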

After you give a valid name and set all the properties, the node flow is created and looks like this:

4. Delete Topic

- This action deletes topics from Kafka.

The following properties are required to deploy the Delete Topic connector:

- Transaction Timeout

  - default: 3000

This requires the input to be a JSON object.

Note: If the topic does not exist, the node logs an error and produces no output.

After you give a valid name and set all the properties, the node flow is created and looks like this:

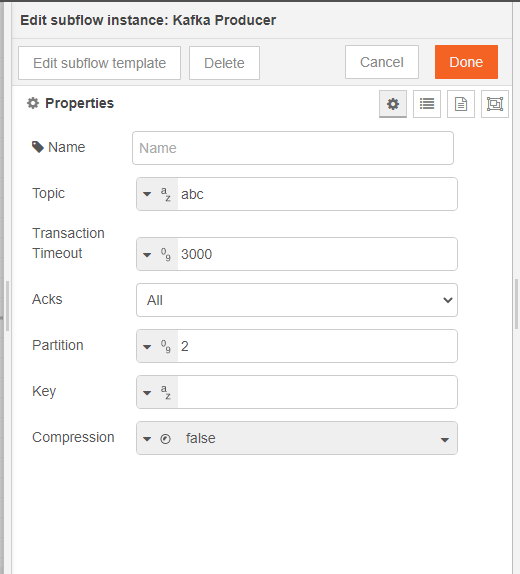

5. Producer

- This action pushes messages to Kafka.

The following properties are required to deploy the Producer connector:

- Topic

- Transaction Timeout

  - default: 3000

- Ack

  - All (default)

  - None

  - Leader

- Auto Topic Create

  - default: false

- Partition

  - Warning: If you do not specify a partition, messages are distributed round-robin across the partitions.

- Key

- Compression

  - default: false

  - If enabled, the compression type is GZIP.
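
To make the default partition behavior concrete, here is a sketch of round-robin assignment in Python. It illustrates the general technique only: the class name `RoundRobinPartitioner` and the partition count are made up for the example, and the connector's internal partitioner may differ in detail.

```python
from itertools import cycle


class RoundRobinPartitioner:
    """Hand out partition numbers in rotation: 0, 1, ..., n-1, 0, 1, ..."""

    def __init__(self, partition_count):
        if partition_count < 1:
            raise ValueError("partition_count must be at least 1")
        self._partitions = cycle(range(partition_count))

    def next_partition(self, explicit=None):
        # An explicitly configured partition always wins over rotation.
        if explicit is not None:
            return explicit
        return next(self._partitions)


partitioner = RoundRobinPartitioner(partition_count=3)
assigned = [partitioner.next_partition() for _ in range(5)]
print(assigned)  # [0, 1, 2, 0, 1]
```

Rotation spreads load evenly but gives no ordering guarantee across messages, so set the Partition property explicitly when related messages must stay on one partition.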

Warning

- The Producer pushes the message in its raw state, as a string. You must therefore stringify JSON, system-sensitive characters, and similar content yourself before sending.
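
Because the Producer sends the payload as a raw string, structured data needs to be serialized before it reaches the node. A minimal sketch of that step in Python follows. `to_kafka_payload` is a hypothetical helper name, and `json.dumps` is one reasonable way to stringify, not something the connector prescribes.

```python
import json


def to_kafka_payload(value):
    """Return a string that can be handed to the Producer as-is."""
    if isinstance(value, str):
        return value                      # already a string: send unchanged
    if isinstance(value, bytes):
        return value.decode("utf-8")      # assumes UTF-8 encoded bytes
    # dicts, lists, numbers, booleans and None become JSON text;
    # json.dumps also escapes quotes, newlines and other control characters.
    return json.dumps(value, ensure_ascii=False)


print(to_kafka_payload({"order_id": 42, "note": 'say "hi"\n'}))
```

A consumer on the other side can then recover the original structure with `json.loads`.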

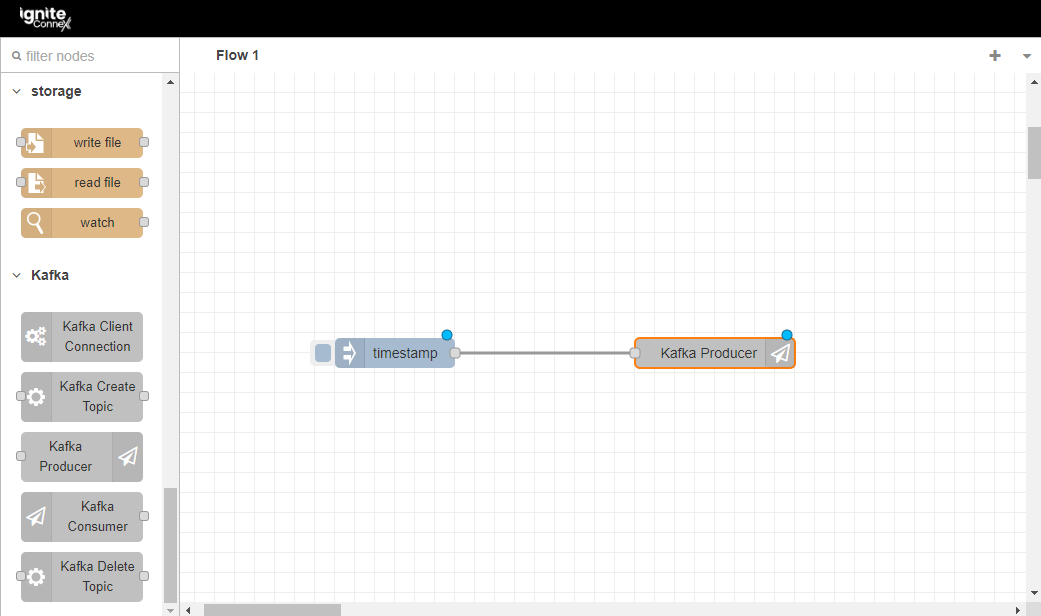

After you give valid parameters, the node flow is created and looks like this:

Conclusion

Kafka Connectors connect different services and systems and stream data between them. By using them, businesses get data integration and communication between systems without configuring each component by hand, which supports better data management, productivity, and performance. Typical use cases include streaming data between databases, webhooks, and message queues. The connectors are also customizable and scale easily, so businesses can adapt them as the needs of their services and systems change.